http://www.summer10.isc.uqam.ca/page/docs/readings/FITCH_Tecumseh/Fitch_Chp29_refs%20_updated.pdf

Musical protolanguage: Darwin's theory of language evolution revisited

W. Tecumseh Fitch

Dept. of Cognitive Biology

University of Vienna

He says go for it - it's good to go.

Together, results suggest that the interaction of music and language syntax processing depends on rhythmic expectancy, and support a merging of theories of music and language syntax processing with dynamic models of attentional entrainment.

Specifically, the Dynamic Attending Theory (DAT) posits a mechanism by which internal neural oscillations, or attending rhythms, synchronize to external rhythms (Large and Jones, 1999). In this entrainment model, rhythmic processing is seen as a fluid process in which attention is involuntarily entrained, in a periodic manner, to a dynamically oscillating array of external rhythms, with attention peaking with stimuli that respect the regularity of a given oscillator (Large and Jones, 1999; Grahn, 2012a). This process of rhythmic entrainment has been suggested to occur via neural resonance, where neurons form a circuit that is periodically aligned with the stimuli, allowing for hierarchical organization of stimuli with multiple neural circuits resonating at different levels, or subdivisions, of the rhythm (Large and Snyder, 2009; Grahn, 2012a; Henry et al., 2015). One piece of evidence in support of the DAT comes from Jones et al. (2002), in which a comparative pitch judgment task was presented with interleaving tones that were separated temporally by regular inter-onset intervals (IOIs) that set up a rhythmic expectancy. Pitch judgments were found to be more accurate when the tone to be judged was separated rhythmically from the interleaving tones by a predictable IOI, compared to an early or late tone that was separated by a shorter or longer IOI, respectively. The temporal expectancy effects from this experiment provide support for rhythmic entrainment of attention within a stimulus sequence.
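To make the entrainment idea in that passage concrete, here is a minimal Python sketch. It assumes a generic discrete adaptive oscillator that corrects its phase and period at each tone onset. This is a simplification for illustration only, not the equations of Large and Jones (1999). The function names, the gain values, and the von Mises-shaped attention pulse are all hypothetical choices.

```python
import math


def entrain(onsets, period, phase_gain=0.5, period_gain=0.2):
    """Hypothetical adaptive-oscillator sketch (not the Large & Jones model).

    At each stimulus onset the oscillator measures how far the onset fell from
    its own expected beat. It then shifts its next expected beat (phase
    correction) and nudges its period toward the stimulus rate (period
    correction). Returns (timing error per onset, final period, next expected
    beat time).
    """
    expected = onsets[0]  # assumption: the first onset sets the initial phase
    errors = []
    for onset in onsets:
        error = onset - expected               # positive = onset later than expected
        errors.append(error)
        period += period_gain * error          # adapt the period toward the IOI
        expected += phase_gain * error + period  # project the next expected beat
    return errors, period, expected


def attention_at(t, expected, period, focus=4.0):
    """Hypothetical attentional weight of an event at time t.

    Returns 1.0 exactly on the expected beat and falls off for early or late
    events (a von Mises-shaped pulse; `focus` sets how sharp the peak is).
    """
    phase = 2 * math.pi * (t - expected) / period
    return math.exp(focus * (math.cos(phase) - 1.0))


if __name__ == "__main__":
    ioi = 0.5                                  # stimulus inter-onset interval (s)
    onsets = [i * ioi for i in range(40)]      # isochronous tone sequence
    errors, period, nxt = entrain(onsets, period=0.6)  # oscillator starts too slow
    print(f"first error {errors[0]:+.3f}s, last error {errors[-1]:+.6f}s")
    print(f"adapted period {period:.4f}s (stimulus IOI {ioi}s)")
    # Probe tones: on the expected beat, early (shorter IOI), late (longer IOI)
    for label, t in [("on time", nxt), ("early", nxt - 0.1), ("late", nxt + 0.1)]:
        print(label, round(attention_at(t, nxt, period), 3))
```

With an isochronous 500 ms sequence and an oscillator that starts at 600 ms, the timing error shrinks over successive tones and the period settles near the inter-onset interval. A probe tone on the expected beat then gets more attentional weight than one arriving 100 ms early or late. This loosely mirrors the Jones et al. (2002) pitch-judgment result; it is a toy model, not a reproduction of that experiment.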

Interesting.

Although main effects of language and rhythm were observed, there was no significant interaction. An explanation for this lack of interaction could be that removing the factor of music resulted in the implemented violations no longer being sufficiently attention-demanding to lead to an interaction between the remaining factors, resulting in parallel processing of language and rhythm.

So, as I posted in my recent upload, it's more about frequency and phase than timing.

Syncopated rhythm, though, is a key secret to this.

The problem with scientists analyzing this issue is that they don't understand music properly!!

Interpreting the fossil evidence for the evolutionary origins of music

but the out-of-proportion evolution of the cerebellum and pre-frontal cortex may be relevant. It is suggested that protomusic was a behavioural feature of Homo ergaster 1.6 million years ago. Protomusic consisted of entrained rhythmical whole-body movements, initially combined with grunts. Homo heidelbergensis, 350 000 years ago, had a brain approaching modern size, had an enlarged thoracic canal which indicates that they had modern-style breathing control essential for singing, and had modern auditory capability, as is evident from the modern configuration of the middle ear. The members of this group may have been capable of producing complex learned vocalizations and thus modern music in which voluntary synchronized movements are combined with consciously manipulated melodies.

The Electrophysiological Correlates of Rhythm and Syntax in Music and Language

These data patterns confirm that rhythm affects the shared cognitive resources between music and language, and are generally consistent with both the SSIRH (Patel, 2003) and DAT (Large & Jones, 1999).

Too bad they can't get past this.

The Effects of Music on Auditory-Motor Integration for Speech:

It was found that musical priming of speech, but not simultaneous synchronized music and speech, facilitated speech perception in both the younger adult and older adult groups. This effect may be driven by rhythmic information.

The talking species - a whole book!

searches musi

Emotion communication in animal vocalizations, music and language: An evolutionary perspective

Piera Filippi and Bruno Gingras.

OK

599 matches!

before turning our attention to acoustic universals in emotion processing in animal vocalizations, human communication (including language), and finally, music. Building on recent findings on cognitive processes and mechanisms underpinning emotional processing shared by these three domains, and on the adaptive role of the ability for emotion communication, we hypothesize that this ability is an evolutionary precursor of the ability for language.

Yes, exactly!!

There are your two claims. First claim debunked (see above):

1) If music were the first way of communicating, we would see it in nature.

Indeed, to a large extent, emotions are expressed through shared acoustic correlates in music and language (Coutinho & Dibben, 2012; Juslin & Laukka, 2003). For example, both music and speech express heightened arousal through an increase in either tempo or loudness (Ilie & Thompson, 2006). Moreover, researchers have observed that smaller melodic pitch intervals tend to be associated with sadness in both speech and music (Bowling, Sundararajan, Han, & Purves, 2012; Curtis & Bharucha, 2010). However, one notable difference between music and speech is that whereas high-pitched speech is associated with increased arousal, there is no clear association between pitch height and music-induced arousal (Ilie & Thompson, 2006).

Well, that's because Western music doesn't understand what causes frisson, but we know it's vagus nerve activation, and so it most likely comes from a nonlinear feedback of higher-frequency overtones causing a subharmonic amplification as ELF sound. But I'm sure that concept is way beyond the "music" choice of these musicologists. Haha.

For instance, Ma and Thompson (2015) showed that changes in acoustic attributes such as frequency, intensity, or rate (i.e., speed relative to the original version of the stimulus) affect the subjective emotional evaluation of environmental sounds including human and animal-produced sounds as well as machine and natural sounds in much the same way as with music and speech. These findings suggest that the subjective emotional evaluation of auditory stimuli may have an even more general basis than previously thought, insofar as basic attributes such as frequency and intensity are considered.

Right, and TIMBRE is a big factor as well.

The "amusia" study doesn't really interest me, because what counts as "music" is typically defined by Western tuning!

Although amusia is mostly conceived of as a musical disorder, it is now generally accepted that individuals with amusia show a wide range of deficits in speech processing in laboratory conditions due to a domain-general impairment in pitch processing. Thus, the reported deficits regarding phonology, prosody and syntax are pertinent to our understanding of how music and speech are processed in the brain and support speculations about a common origin of music and language.

Second claim debunked (see above):

2) People who can’t appreciate music would also experience trouble with language and logic.

A Joint Prosodic Origin of Language and Music

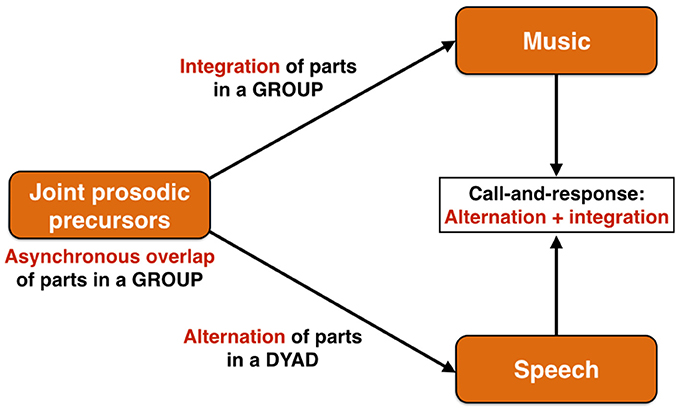

I present here a model for a joint prosodic precursor of language and music in which ritualized group-level vocalizations served as the ancestral state. This precursor combined not only affective and intonational aspects of prosody, but also holistic and combinatorial mechanisms of phrase generation. From this common stage, there was a bifurcation to form language and music as separate, though homologous, specializations. This separation of language and music was accompanied by their (re)unification in songs with words.

(1) music would evolve to achieve a tight temporal integration of parts through the evolution of the capacities for both rhythmic entrainment and vocal imitation, and that (2) speech would evolve to achieve an alternation of parts, as occurs in standard dialogic exchange (Figure 3). This functional and structural bifurcation reflects the fact that music retains the precursor's primary function as a device for group-wide coordination of emotional expression, most likely for territorial purposes, while language evolves as a system for dyadic information exchange in which an alternation of parts, rather than simultaneity, becomes the most efficient means of carrying out this exchange. These distinctive communicative arrangements of music and speech come together in a performance style that is found quite widely across world cultures, namely call-and-response singing (Figure 3), where the call part is informational and is textually complex (typically performed by a soloist, as in speech) and the response part is integrated and textually simple (typically performed by a chorus, as in music). Call-and-response is an alternating (turn-taking) exchange, but one between an individual and a group, rather than two individuals.

Now this is what I was getting at!! Syncopated rhythms...

polyrhythms in brain and music

Oh I remember when this study got published - I cited this before but I forgot about it.

Yep.

Advances in the methodology of intonational analysis have made it possible to adequately describe and more deeply understand the principles that govern the tonal organization of non-Western types of music—including those that are based on timbre rather than pitch. This approach can be effectively applied to the analysis of both ethnological and developmental data—to identify common patterns of ontogenetic and phylogenetic development.

Again, that depends on how you define "pitch."

Where did language come from? Connecting sign, song, and speech in hominin evolution

So with a critical eye I consider Michael Corballis’s most recent expression of his ideas about this transition (2017’s The Truth About Language: What It Is And Where It Came From). Corballis’s view is an excellent foil to mine and I present it as such. Contrary to Corballis’s account, and developing Burling’s conjecture that musicality played some role, I argue that the foundations of an evolving musicality (i.e., evolving largely independently of language) provided the means and medium for the shift from gestural to vocal dominance in language. In other words, I suggest that an independently evolving musicality prepared ancient hominins, morphologically and cognitively, for intentional articulate vocal production, enabling the evolution of speech.

Oh, that should be fascinating indeed!


Wow, news to me!

Yes, that people would be "triggered" by this claim is not surprising. First of all, if you read math professor Luigi Borzacchini's published article on incommensurability, he provides evidence for a "cognitive bias" against the music origins of Western science. Secondly, Alain Connes is the ONLY scientist who realizes that the truth of music theory is noncommutative phase logic. And there is a math professor in Mexico who cites Connes and then further clarifies this connection to the Pre-Socratics. So Chomsky has not dismissed music at all, and Chomsky does know his linguistics; he basically invented the modern study of linguistics. To quote Chomsky:

"But what about music. There is some interesting work, trying to show that the basic properties of musical systems are similar to the structural properties of linguistic systems. Quite interesting work on that. Maybe that will come out."

Thanks.

Sure, I call that the "Macro Heisenberg Effect." Umm, actually Gregory Bateson's book "Mind and Nature: A Necessary Unity" goes into this quite well, or at least engages with the topic well. So I realized we were DOOMED by 1996, and then, logically, I figured I should plan for what happens AFTER death. So I finished my master's degree through the African Studies department, based on the book "The Racial Contract," stating that Plato and Aristotle's Natural Law was inaccurate and racist, and that a proper Pre-Socratic philosophy is actually non-Western and African, as explained via music theory. I had first sent my initial model to Zizek in 1996 as a monograph entitled "The Fundamental Force," as I stated above, and so my master's thesis engages at length with both Zizek AND Chomsky from this music theory perspective.

So Eddie Oshins coined the term "quantum psychology," but he based it on noncommutative phase logic, so he didn't appreciate his new discipline being co-opted with the wrong math. Oshins, at the Stanford Linear Accelerator Center, also taught Wing Chun, and so he realized that the secret of Neigong is noncommutative phase logic.

So he corroborated this same discovery I had made. In fact, I realized that if I had made this discovery on my own, then SOMEONE MUST have already discovered it. It took me quite a long time to find Eddie Oshins, as his website doesn't link anywhere else and he died young of a heart attack. But I have corresponded with math professor Louis Kauffman, who worked with Oshins at SLAC.
